Artificial Intelligence is transforming the world we know on a daily basis. But, as we trust it to make more and more choices, what if we don’t like the decisions that are made? What happens when AI denies us something and we ask for an explanation?

After all, businesses have to explain their reasoning, and using AI doesn’t exempt them from that. For the most part, customers are happy when they get the results they want, but what happens when applications are denied and those same clients ask for an explanation?

The State of AI

AI is opening up opportunities to increase productivity, drive innovation and transform operating models across industries. It already has a significant impact on sectors such as finance, healthcare, manufacturing and autonomous driving (the latter an entire industry that would not exist without AI), to name just a few.

One example of AI’s disruptive expansion is trading. According to Art Hogan, Chief Market Strategist at B. Riley FBR, robots carry out somewhere between 50% and 60% of Wall Street’s buy and sell orders.

Future predictions for AI are impressive, too:

- Over 70% of executives taking part in a 2017 PwC study believed that AI will impact every facet of business.

- On a similar note, Gartner estimates that the business value derived from Artificial Intelligence will reach $3.9 trillion (roughly £3 trillion) by 2022, with decision support/augmentation use cases accounting for about 44% of that value.

- McKinsey also predicts that AI has the potential to deliver additional global economic activity of around $13 trillion (roughly £10 trillion) by 2030.

It’s clear that AI has plenty of potential for business, and it’s no wonder: Deep Learning and growing computing power enable the automation of complex tasks such as object detection, object classification, and video and voice analytics, not to mention the growing natural language processing sector.

But this is where accountability and explainability come into play. Businesses are held accountable for their actions, so when those actions are determined by automated processes, companies need to be able to explain how those processes reached their decisions.

The Black Box Problem

In AI circles, this issue with explainability is known as the ‘black box’ problem. The best example of this phenomenon can be found in Deep Learning models, which can use millions of parameters and create extremely complex representations of the data sets they process.

We know that inputs, such as data, go into such a solution, and we know that an answer or decision comes out. What happens in between is often unknown. This is especially common in Neural Networks which, due to their complexity, are often called black box techniques. Once trained on large data sets, they can provide highly accurate predictions, but it is practically impossible for a human to understand the internal structure, features and representations of the data that the model uses to perform a particular task.

Solving this problem, and making such models understandable, is the focus of AI explainability.
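Many explainability techniques work within exactly the constraint described above: they only observe what goes into a model and what comes out. As an illustration, here is a minimal sketch of one such model-agnostic method, permutation feature importance. The `black_box` function and the toy data are hypothetical stand-ins for a trained model; the technique shuffles one input feature at a time and measures how much accuracy drops, so a feature the model ignores scores zero.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]


def accuracy(model: Callable[[Row], int], X: Sequence[Row], y: Sequence[int]) -> float:
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(
    model: Callable[[Row], int],
    X: Sequence[Row],
    y: Sequence[int],
    n_repeats: int = 5,
    seed: int = 0,
) -> List[float]:
    """Mean drop in accuracy when each feature column is shuffled.

    The model is only ever called, never inspected, so any black box that
    maps an input row to a predicted class can be explained this way.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild every row with feature j replaced by a shuffled value.
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, shuffled, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical black box: internally it only ever looks at feature 0.
def black_box(row: Row) -> int:
    return 1 if row[0] > 0.5 else 0


# Toy data: feature 1 is a deterministic scramble the model ignores.
X = [[i / 19, (i * 7 % 19) / 19] for i in range(20)]
y = [black_box(row) for row in X]
print(permutation_importance(black_box, X, y))  # feature 1 scores 0.0
```

Because the sketch only calls the model, the same function would work for a neural network or any other classifier, at the cost of many extra predictions on large data sets.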

Why Do Explanations Matter?

First and foremost, let’s be clear that there are situations where such black box solutions work perfectly well.

This type of AI is acceptable for high-volume decision-making cases where the consequences of failure are relatively benign, such as online recommendations (Netflix, Amazon, online adverts, etc.). Here, understanding how the internal algorithms work doesn’t matter, so long as they provide the expected results (higher revenue, in the case of online adverts).

Since AI is all around us, from smart wearables and home appliances to online recommendation engines, we’ve long accepted that AI makes decisions about us without our knowing exactly how, even when those decisions are unfair. We don’t care that Netflix’s Machine Learning recommended a horrible movie because the impact on our lives is, ultimately, minimal.

The lack of explainability becomes a problem where this risk isn’t minimal. AI’s inability to explain its reasoning to human users is a huge barrier to adoption in scenarios where the consequences of failure are severe, such as credit risk assessment or medical diagnostics, where there is a lot at stake.

In fact, it should go without saying (but we’re going to say it anyway): the higher the cost of poor decision-making, the greater the need for explainable AI.

Source: https://www.accenture.com/_acnmedia/pdf-85/accenture-understanding-machines-explainable-ai.pdf

Trust, Business and Regulators

Trust is also a concern for organisations across many industries. In PwC’s 2017 Global CEO Survey, 67% of respondents believed that AI and automation would negatively impact stakeholder trust in their industry.

It’s not just businesses that have noted these concerns, either. The European Union’s General Data Protection Regulation (GDPR) introduced what is widely described as a right to explanation, in an attempt to deal with the potential problems caused by the rising use and importance of AI algorithms.

We’ll get into specific regulators and their legislation at a later date. For now, it’s enough to know that businesses are not the only ones looking into this issue: regulatory bodies are setting clear legal requirements for companies, and customers and end-clients expect explanations too.

Introducing AI Explainability

A clearer understanding of AI decisions is a must if AI’s use and integration are to continue. Explainable AI (XAI) collectively refers to the methods and technologies developed to make machine learning interpretable enough that human users can understand the results of a specific solution.

XAI can take the form, for instance, of a data-level explanation focused on the reasoning behind a specific decision. A rejected credit application, for example, could be explained with: “The application was rejected because the case is similar to 92% of rejected cases.”

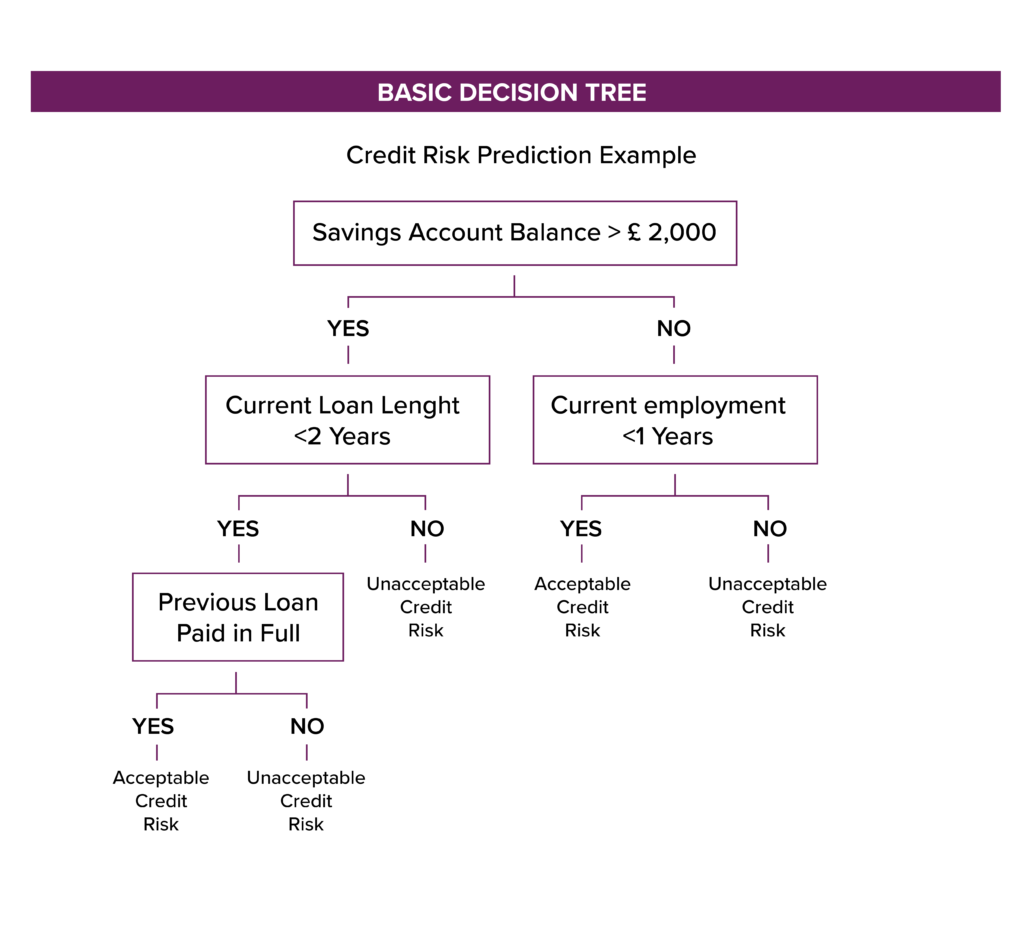
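One way to produce a data-level explanation like this is to look up the most similar past cases. The sketch below assumes a hypothetical history of credit decisions described by two features (savings and years in current job), scales both features to a common range, and reports what share of the applicant’s k nearest neighbours were rejected. It illustrates a nearest-neighbour style of explanation; it is not the method behind the 92% figure above.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical past decision: (savings in £, years in current job, was_rejected)
Case = Tuple[float, float, bool]


def similar_case_explanation(applicant: Tuple[float, float], history: List[Case], k: int = 5) -> str:
    """Explain a decision by how many of the k most similar past cases were rejected."""
    # Min-max scale both features so pounds and years contribute comparably.
    lows = [min(case[i] for case in history) for i in range(2)]
    highs = [max(case[i] for case in history) for i in range(2)]

    def scaled(point: Sequence[float]) -> List[float]:
        # `or 1.0` avoids dividing by zero when a feature never varies.
        return [(point[i] - lows[i]) / ((highs[i] - lows[i]) or 1.0) for i in range(2)]

    target = scaled(applicant)
    neighbours = sorted(history, key=lambda case: math.dist(target, scaled(case)))[:k]
    share = sum(case[2] for case in neighbours) / len(neighbours)
    return f"Of the {len(neighbours)} most similar past applications, {share:.0%} were rejected."


history: List[Case] = [
    (500, 0.5, True), (800, 0.3, True), (1200, 0.8, True), (300, 2.0, True), (1500, 0.2, True),
    (9000, 5.0, False), (12000, 3.0, False), (7000, 4.0, False), (6000, 8.0, False), (15000, 10.0, False),
]
print(similar_case_explanation((1000, 0.6), history))
```

Choosing k is a trade-off: a small k grounds the explanation in a handful of very close cases, while a larger k is more stable but less specific.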

Alternatively, XAI can provide model-level explanations that focus on the algorithmic side of the solution. In these cases, XAI mimics the model by abstracting it and adding domain knowledge. Returning to the credit application example, this approach could determine that “The application is rejected because any applicant who has less than £2,000 in their savings account and whose current employment is shorter than 1 year is rejected.” This shows the clear logic of the model.
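A model-level explanation of this kind can be approximated with a surrogate: a simple, readable model fitted to the black box’s own predictions rather than to the true outcomes. The minimal sketch below uses a hypothetical `opaque_model` standing in for the real system and searches for the “reject if savings < s and employment < t” rule that agrees with it on the most applications (its fidelity). Thresholds are restricted to observed values, so they land on the nearest observed value above the model’s true cut-off.

```python
from itertools import product
from typing import Callable, List, Tuple

Application = Tuple[float, float]  # (savings in £, years in current job)


def opaque_model(app: Application) -> bool:
    """Hypothetical black box; returns True when the application is rejected."""
    savings, years = app
    return max(savings / 2000, years) < 1.0


def fit_rule_surrogate(
    model: Callable[[Application], bool], apps: List[Application]
) -> Tuple[float, float, float]:
    """Find 'reject if savings < s and years < t' agreeing most often with the model.

    Returns (s, t, fidelity), where fidelity is the share of applications on
    which the rule and the black box make the same decision.
    """
    labels = [model(app) for app in apps]
    savings_cuts = sorted({savings for savings, _ in apps})
    years_cuts = sorted({years for _, years in apps})
    best = (0.0, 0.0, -1.0)
    for s, t in product(savings_cuts, years_cuts):
        agree = sum(((sv < s) and (yr < t)) == label for (sv, yr), label in zip(apps, labels))
        fidelity = agree / len(apps)
        if fidelity > best[2]:  # keep the first best rule found
            best = (s, t, fidelity)
    return best


apps = [(500, 0.5), (1500, 0.8), (2500, 0.3), (800, 3.0),
        (4000, 6.0), (1900, 0.9), (3000, 1.2), (100, 0.1)]
s, t, fidelity = fit_rule_surrogate(opaque_model, apps)
print(f"Reject if savings < £{s:,.0f} and employment < {t} years (fidelity {fidelity:.0%})")
```

Here the toy black box follows such a rule exactly, so the surrogate reaches 100% fidelity; with a real model, the fidelity score indicates how far the readable rule can be trusted as a description of it.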

Who Needs AI Explainability – And Why?

Despite the above examples, it’s important to recognise that XAI is required by nearly all stakeholders, not just the final customer. Furthermore, perspectives on, and demands for, AI interpretability vary from one group of users to another.

For example:

- Executives require appropriate measures for protecting the company from unintended consequences and reputational damage.

- Management need XAI to feel confident enough to deploy AI solutions for commercial use.

- Users need assurance that a given AI solution is making the right decisions.

- Both regulators and customers want to be sure that the technology is operating in line with ethical norms.

If it’s not clear already: everyone has a stake in XAI.

Here are a few real-world situations in which AI is being used, and how XAI can help in each.

Human Readability

In these cases, AI is being used to support decisions. Users of these systems aren’t looking for AI to collect all the data and make recommendations automatically; what they care about are the reasons behind the recommendations and what they can do about them.

Here, the black box approach doesn’t work; XAI is essential. Implementing it will increase both transparency and the business’s understanding of its own AI services.
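For a linear scoring model, per-decision reasons can be read straight off the model: each feature’s contribution is its weight times how far the applicant sits from a reference profile. The sketch below assumes hypothetical weights, a hypothetical “typical applicant” baseline and illustrative feature names, and returns the features that pulled the score down the most. Credit scoring commonly calls these “reason codes”; non-linear models need approximation methods instead.

```python
from typing import Dict, List

# Hypothetical linear credit score: weights per feature, plus a reference
# "typical applicant" profile that each applicant is compared against.
WEIGHTS: Dict[str, float] = {"savings_k": 0.8, "years_employed": 0.5, "missed_payments": -1.2}
BASELINE: Dict[str, float] = {"savings_k": 5.0, "years_employed": 3.0, "missed_payments": 0.0}


def reason_codes(applicant: Dict[str, float], top_n: int = 2) -> List[str]:
    """Plain-language reasons: the features that pulled this score down the most."""
    # In a linear model, weight * (value - baseline) is exactly how much each
    # feature moved the score relative to the typical applicant.
    contributions = {
        name: weight * (applicant[name] - BASELINE[name]) for name, weight in WEIGHTS.items()
    }
    negatives = sorted((c, name) for name, c in contributions.items() if c < 0)
    return [f"{name} lowered the score by {-c:.1f} points" for c, name in negatives[:top_n]]


applicant = {"savings_k": 1.5, "years_employed": 0.5, "missed_payments": 2}
for reason in reason_codes(applicant):
    print(reason)
```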

Decision Justification

There are also many situations where businesses need to justify a decision. A prime example is firing (or even hiring) an employee. Under GDPR, people subject to decisions based solely on automated processing that significantly affect them are entitled to meaningful information about the logic involved. If a company used Machine Learning in this process but has no understanding of how the algorithm reached its conclusions, it could face legal consequences.

Avoiding Unintentional Discrimination

Because an AI’s learning is highly influenced by the data sets it is fed, Machine Learning models can pick up bias within the data, leading to biased or discriminatory outcomes.

The role of ethics in Machine Learning is increasingly important, and we’ve already seen examples of unintentional discrimination in the real world. Research in 2015, for example, famously found that searching for “CEO” on Google Images showed women only 11% of the time, when the actual real-world representation (at least in the US) was 27%. In contrast, searching for “telemarketer” favoured images of women (64%), even though the occupation is roughly evenly split.

Issues like this are not a conscious decision by the AI; they are inherent in the existing data. We could argue, then, that one of XAI’s roles is to expose such human bias so that it can be removed from the process. We need Machine Learning to be better than us.
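A simple first check for this kind of bias is to compare outcome rates across groups before digging into why they differ. The sketch below computes each group’s selection rate and the ratio of the lowest to the highest. The group labels and decisions are made up, and the 0.8 cut-off follows the “four-fifths” rule of thumb from US employment guidelines, which is one convention among several fairness measures.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of positive outcomes (e.g. approvals or hires) per group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions: Iterable[Tuple[str, bool]]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = list(selection_rates(decisions).values())
    highest = max(rates)
    return min(rates) / highest if highest > 0 else 1.0


# Made-up outcomes for two groups, A and B.
decisions = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
ratio = disparate_impact_ratio(decisions)
print(f"ratio = {ratio:.3f}; flagged: {ratio < 0.8}")  # four-fifths rule of thumb
```

A low ratio does not say why the model behaves this way; that is where explanation techniques come in.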

Facilitating Improvement

In various situations, Machine Learning can indicate or predict behaviour but not provide enough information for the business to act on it.

For example, in churn prediction, ML can readily flag customers who are likely to leave, with high accuracy. However, even if the company is confident they are going to leave, it has no idea why, or what it can do to make them stay. It has no useful information on each specific customer with which to resolve the situation.

XAI can provide greater insight into this decision-making process and, hopefully, answer the “why”. This knowledge is the perfect starting point for organisations looking to understand their clients better; they can then take decisive action to ensure that more customers stay.
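One way to turn a churn prediction into something actionable is a what-if (counterfactual) explanation: change one thing the business controls and see whether the predicted risk falls. The sketch below assumes a hypothetical logistic churn model with made-up coefficients and two hypothetical retention actions, and reports which single action would bring a customer’s churn probability below 0.5.

```python
import math
from typing import Dict, List, Tuple

Customer = Dict[str, float]


def churn_probability(c: Customer) -> float:
    """Hypothetical churn model: a fixed logistic regression for illustration."""
    z = -2.0 + 0.04 * c["monthly_price"] + 0.6 * c["support_tickets"] - 0.05 * c["months_active"]
    return 1 / (1 + math.exp(-z))


# Hypothetical retention actions: each scales one feature by a factor.
ACTIONS: Dict[str, Tuple[str, float]] = {
    "offer a 20% discount": ("monthly_price", 0.8),
    "resolve open support tickets": ("support_tickets", 0.0),
}


def what_if(c: Customer, threshold: float = 0.5) -> List[Tuple[str, float]]:
    """Return the single actions that would bring churn probability below threshold."""
    helpful = []
    for action, (feature, factor) in ACTIONS.items():
        changed = dict(c)
        changed[feature] = c[feature] * factor
        p = churn_probability(changed)
        if p < threshold:
            helpful.append((action, p))
    return helpful


customer = {"monthly_price": 60.0, "support_tickets": 3.0, "months_active": 10.0}
print(f"current risk: {churn_probability(customer):.2f}")
for action, p in what_if(customer):
    print(f"{action}: risk falls to {p:.2f}")
```

For this example customer, the discount barely moves the prediction while resolving support tickets brings it under the threshold, which would point a retention team at service quality rather than price.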

In other situations, such as our own Mesh Twin Learning, we can readily implement ML to improve performance and make numerous micro-optimisations, but what if engineers want to build on these findings to develop an expansion? Just as in the finance examples, XAI is the answer.

Summary – What’s the current state of XAI?

If you think XAI is important now, just you wait. AI is clearly here to stay, and companies that don’t introduce it run a significant risk of being left behind. As AI grows and makes more vital decisions, the importance of XAI will only increase.

Alongside explainability sits accountability. When companies use AI, there’s always the question of who in the organisation is responsible. If AI is being used by departments that aren’t technologically focused, the executives at the top need to upskill their own understanding of AI. After all, they need to be confident that any AI deployed through their department won’t come back to hurt the company.

In terms of AI explainability itself, many options are already available. If you’re using decision trees or other simpler Machine Learning models, it’s quite easy to show how decisions are made. Even with neural networks, we’re already opening up that black box and seeing which key factors drive a decision.
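With a decision tree, the explanation is simply the path an input takes from root to leaf. The sketch below hand-builds a hypothetical two-level tree that mirrors the credit rule discussed earlier (savings under £2,000 and employment under a year) and records every test along the way, so each decision arrives with its own justification.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple, Union


@dataclass
class Node:
    """An internal test: go left if x[feature] < threshold, else right."""
    feature: str
    threshold: float
    left: "Tree"
    right: "Tree"


Tree = Union[Node, str]  # a leaf is just the decision label


def decide_and_explain(tree: Tree, x: Dict[str, float]) -> Tuple[str, List[str]]:
    """Follow the tree for input x, recording each test passed on the way down."""
    path: List[str] = []
    while isinstance(tree, Node):
        value = x[tree.feature]
        if value < tree.threshold:
            path.append(f"{tree.feature} = {value} < {tree.threshold}")
            tree = tree.left
        else:
            path.append(f"{tree.feature} = {value} >= {tree.threshold}")
            tree = tree.right
    return tree, path


# Hypothetical tree mirroring the credit rule: reject only when savings are
# under £2,000 and current employment is shorter than one year.
credit_tree = Node("savings", 2000, Node("years_employed", 1, "reject", "approve"), "approve")

decision, reasons = decide_and_explain(credit_tree, {"savings": 1200, "years_employed": 0.5})
print(decision, reasons)
```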

Sources

- Understanding Machines: Explainable AI [PDF]

- 3 GDPR Compliance Steps Explained

- Explainable Artificial Intelligence

- The First Woman Who Appears In A Google Image Search For CEO Is Barbie

- Robots Are Taking Over Human Jobs On Wall Street

- Gartner Estimates AI Business Value To Reach Nearly $4 Trillion By 2022

- Notes From The AI Frontier: Modeling The Impact Of AI On The World Economy

- Google’s New “Explainable AI” (xAI) Service